10 Searching with language models: A localised approach

Martin Steer

Large Language Models (LLMs) are increasingly replacing traditional search engines in everyday use. Instead of ‘Let’s ask Google,’ people now converse with systems like ChatGPT or Perplexity.ai, benefiting from their ability to handle natural language queries and generate clear, conversational responses. However, these models have significant limitations: they frequently produce convincing but incorrect answers (often called ‘oddities’ rather than ‘hallucinations’), lack consistent source attribution, and raise concerns about bias, misinformation, and resource efficiency.

This chapter explores Retrieval Augmented Generation (RAG), a technique that addresses some of these challenges by giving language models access to verifiable external information sources during generation. Rather than solely relying on the models’ internal parameters, RAG aims to enhance language models by connecting them to external information sources, helping prevent fabricated responses by ‘grounding’ generated content in verifiable documents. This grounding process directly ties model responses to trustworthy factual sources outside its training data, improving accuracy and providing evidence for claims, ultimately reducing the chances of plausible-sounding but incorrect information.

While the RAG technology is not a perfect solution and is evolving rapidly as of 2025, it’s accessible even to beginners without programming knowledge. We’ll focus on a localised approach that extends the previous chapter’s text file searching capabilities, allowing you to search, retrieve, and summarise your personal document collection using local Machine Learning (ML) Language Models. The advantage of the method described here is not that it will eliminate hallucinations or oddities, but that it makes it much easier to cross-reference the results and check for yourself.

For this approach, you’ll need a reasonably capable computer with minimum 8GB RAM and 10-20GB free space (GPU optional but helpful). Using smaller local models has trade-offs compared to cloud-based LLMs like Claude, ChatGPT or CoPilot, which can be an important factor affecting the accuracy of responses (see below ‘Types of language models’ for more detail). Remember that all language models, large and small, remain non-deterministic and have strengths and weaknesses, so always assess and understand the model’s capabilities before you use it, and verify all outputs carefully.

Next, you should familiarise yourself with what models are, which models are available in GPT4All, and what you can do with them.

Types of language models

A model is a piece of software that’s been trained on lots of examples, allowing GPT4All to understand your queries and produce useful responses. A ‘model’ is basically an ‘AI’ or part of one.

In addition to the dimension of size for specific language models there are also different types. Here, type means multiple things: 1) the type of algorithms or architecture the model is built with, 2) the type of task the model was trained to do, and 3) the type which GPT4All lists as the model’s base name.

The type of algorithm a model uses is beyond the scope of this chapter, but just briefly, this relates to the machine learning computational architecture. Language models, which predict the next word in a sequence, are usually built using a transformer architecture, which was published by Google in 2017. Vision models, which are used to segment, classify or generate images, use variations of a Convolutional Neural Network (CNN) which has its technical origins in the ‘neocognitron’, introduced by Kunihiko Fukshima in 1980. Model Architecture is an active area of AI research and there is an ever increasing number of novel model architectures appearing in the literature.

The second type is the type of task an LM has been trained to perform, and each serves a different purpose. There are instructional models which excel at generating text based on given instructions or prompts, and are often used for tasks like text summarisation or classification. Chat-based models are designed to engage in natural sounding conversations with users, leveraging context and understanding of dialogue flows. Code generation models specialise in generating code snippets or entire programs based on input specifications, and are used for programmer coding assistance. More recently chain of thought and reasoning models have begun to appear, where tasks are internally decomposed into subtasks and subsequently recombined as the model processes and generates its response.

There are also embedding-based models, different in kind to the above models, and often consisting of dense semantic vectors which are built from the training data. Word Embeddings are a way for computers to represent, understand and work with words and concepts that have many simultaneous and/or overlapping meanings.

You’ll produce word embeddings in the next section, when you transform your corpus of text files and build your own RAG pipeline in GPT4All. So please prepare your own corpus of text files before continuing. Converting PDFs to text files is covered in the previous chapter and you could use those text files for this local RAG approach.

Using local language models in GPT4All

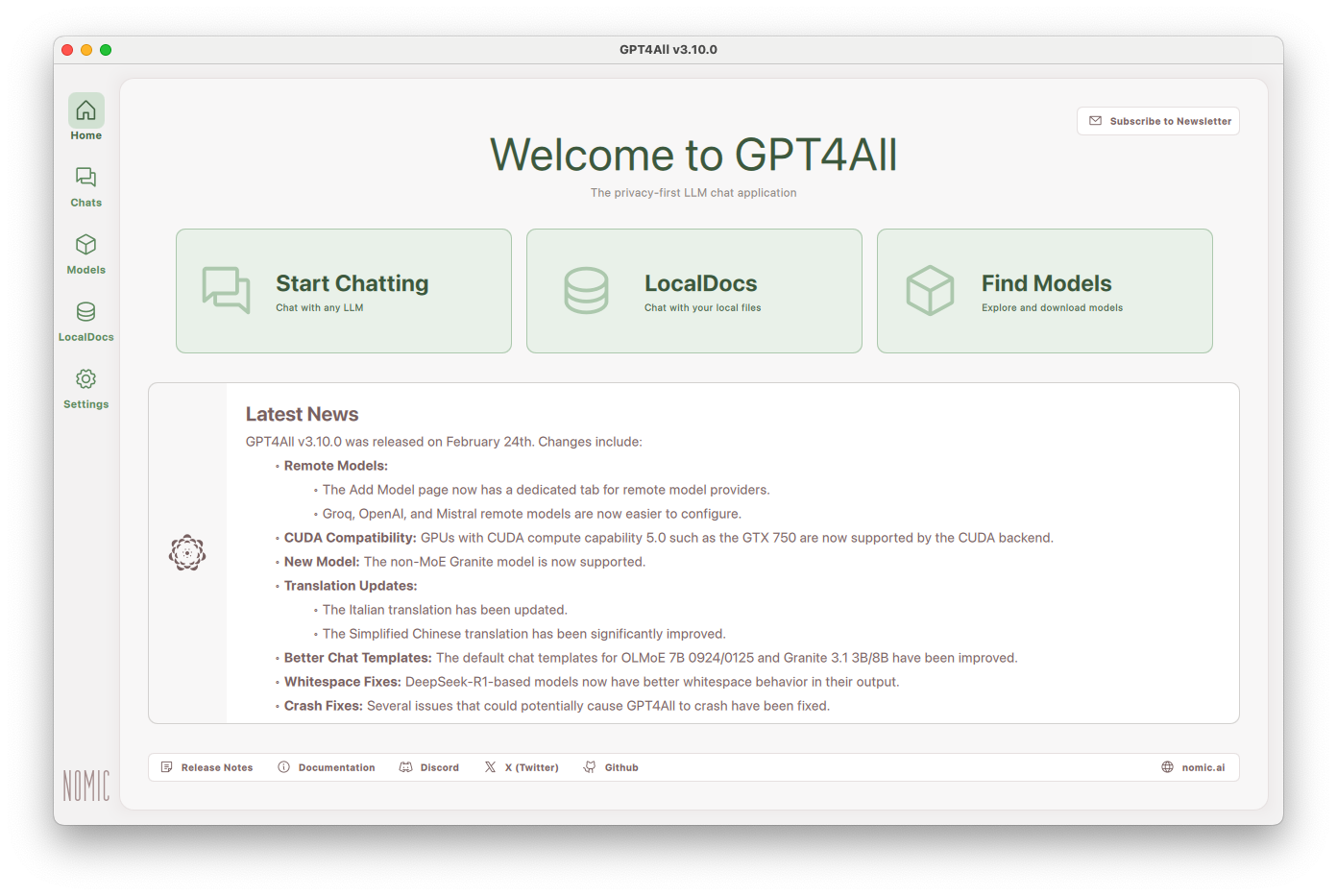

There are many different applications which you can install locally to set up your own RAG, but for simplicity we have chosen to use an application called GPT4All from a company called Nomic. It is open source, runs on Windows, Mac and Linux, easy to install and allows you to download a variety of different Language Models from inside the application. It’s a pretty basic user interface, but does the job simply and easily, which is very useful for getting started with the technology.

Follow the QuickStart instructions on their documentation site. It’s a matter of downloading the installer for your specific operating system, installing it and running the application. The main application window looks like this:

[screenshots accurate at time of publication, but given the rapidly evolving field, please be aware they may differ from what you use at the time you follow along with this chapter]

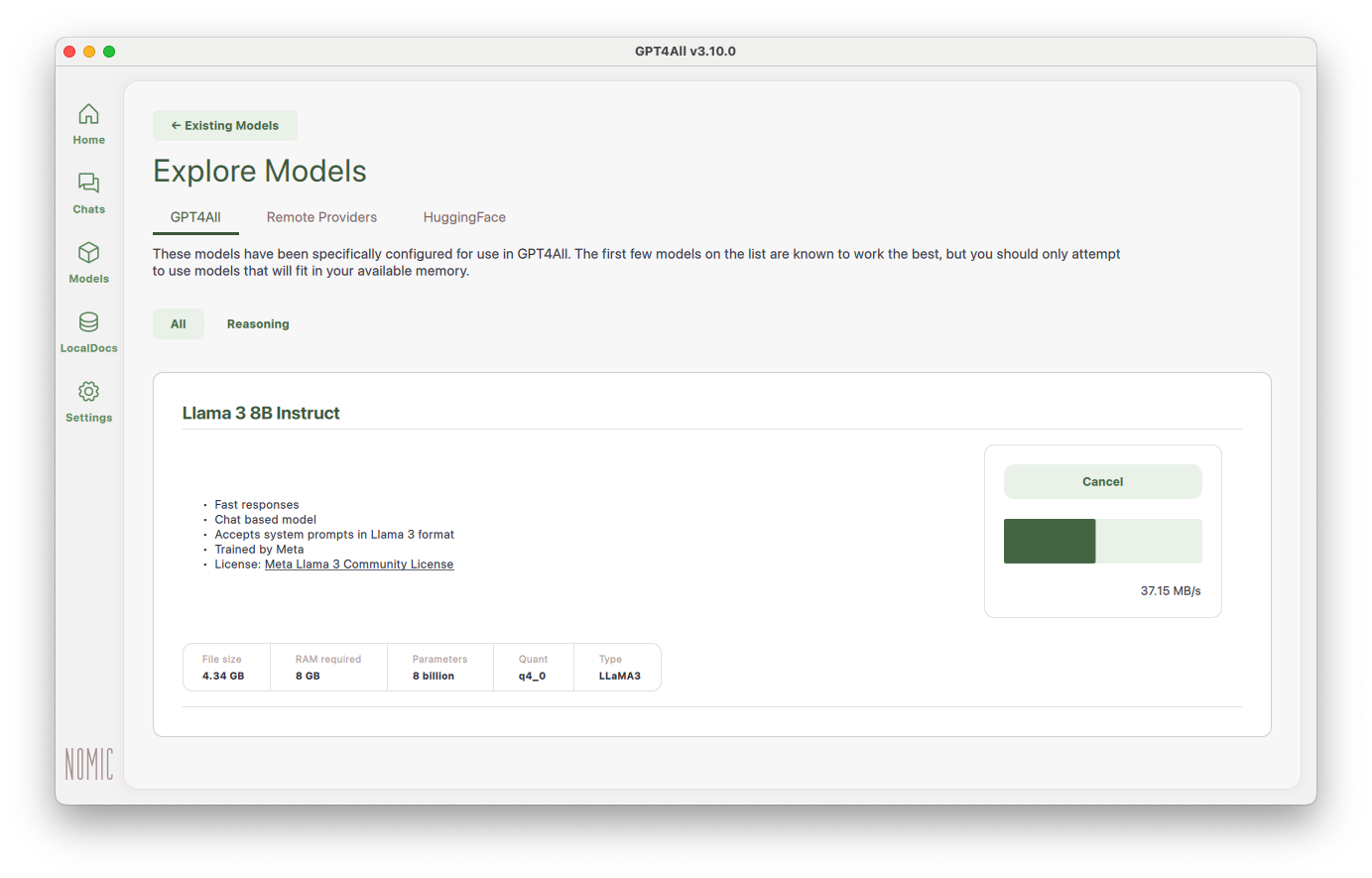

Click the green ‘Find models’ button on the right and explore the list. Nomic recommends starting with the ‘Llama3 Instruct’ model, which is a 4.34GB download and requires 8GB RAM to use.

If you know the name of a different model you want to use you can search it up. A company called HuggingFace has become the de facto storage platform for machine learning models and GPT4All will search HuggingFace and check compatibility of the models with your machine. See the GPT4All Models documentation page.

Now that you’re installing local language models, you will need to start to pay attention to the nomenclature of the models you’re using.

The size of the model can vary greatly and mostly, but not entirely, depends on the number of parameters. Some models have millions, while others have billions of parameters. Parameters store the learned representations of words and characters in the language model and determine its performance and computational requirements. Some models are relatively small and can fit on a mobile device, while others require significant storage space and RAM and may need to run in the cloud. GPT4All allows use of both.

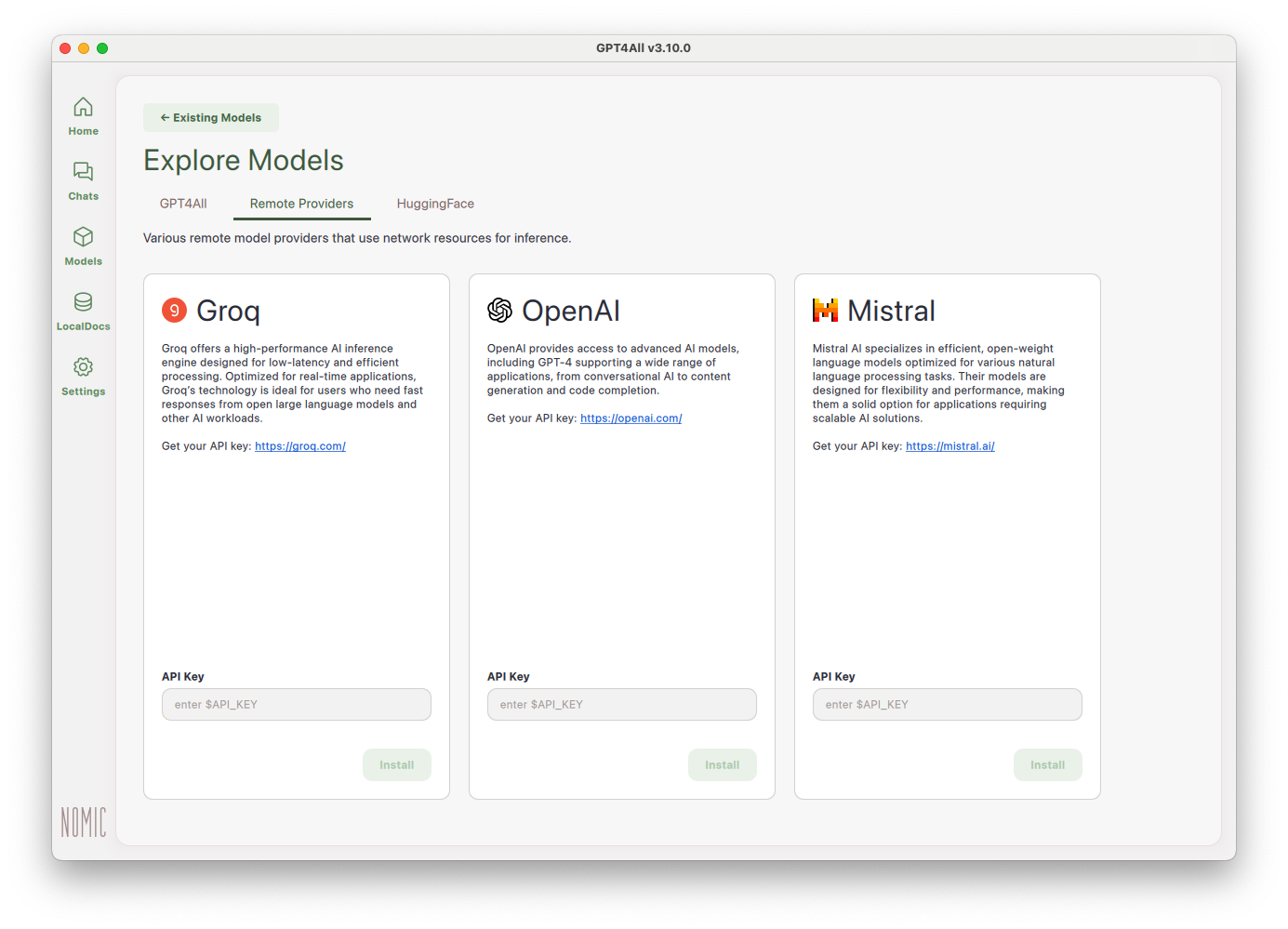

When you ‘add model’ you will see a download button for getting GPT4All’s preprepared local models, a tab for connecting to cloud/remote models (using an API_KEY) or a field to download specific other models from HuggingFace.

Download Llama3 8B Instruct, if you haven’t already.

First steps with Retrieval Augmented Generation (RAG)

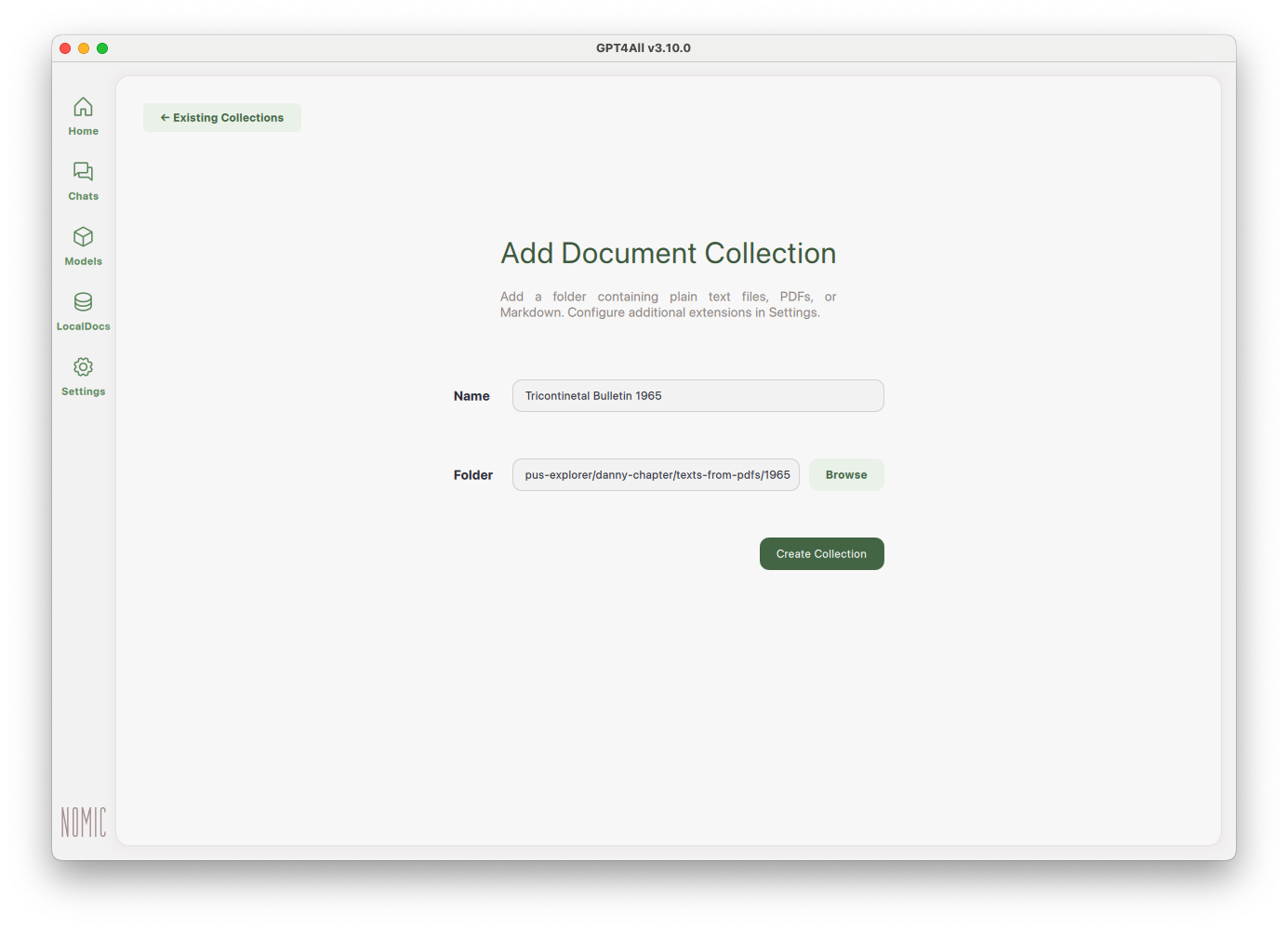

Let’s load your text corpus into GPT4All and generate their text embeddings. GPT4All calls these LocalDocs.

If you haven’t created the text files from the Tricontinental PDFs (see chapters by Karen Smith and Jonathan Blaney in this volume), you can use the text files we prepared earlier.

Download the example text files here: https://hdl.handle.net/10779/uos.28669670.v1

Go to the LocalDocs screen in your application and click the ‘Add Collection’ button in the top right. Give your collection a useful name, browse to the directory where your text files are stored and click ‘Create Collection’:

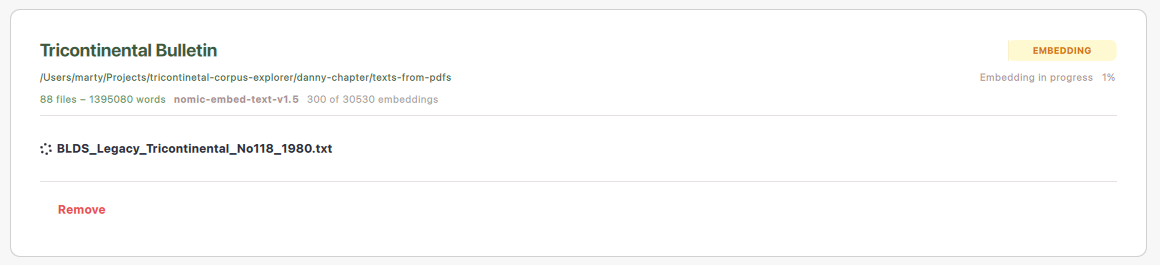

Once you create the collection, GPT4All scans the directory and begins embedding your files. This can take just minutes or up to several hours, especially if you have a lot of long text documents or an older computer. Just wait it out. If you haven’t previously downloaded the nomic-embed-text-v1.5 model this will automatically download first before embedding begins. On a 2020 model MacBook Air M1 it took about 13 minutes to embed 88 of the Tricontinental OCR text documents from 1965.

Once 100% complete, you will have a collection of word embeddings to use to ‘chat’ with your local documents.

Embedding space as a concept

Embeddings refer to the process of transforming input data into dense numerical vector representations that capture its semantic meanings. A specific model’s embeddings exist in the same high-dimensional space. Text embeddings can be aligned with image embeddings in a shared ‘latent space’, and the resultant modal is called a multimodal modal. This concept of latent spaces is crucial in machine learning and natural language processing (NLP), allowing models to compare semantic relationships between words, phrases, or even entire documents.

For example, suppose we have three terms: Vietnam, South Vietnam and Cambodia. An embedding model might create 2-dimensional vectors for each term like this:

‘Vietnam’ vector ≈ [0.5, 0.3]

‘South Vietnam’ vector ≈ [0.7, 0.2] (numerically similar to ‘Vietnam’)

‘Cambodia’ vector ≈ [-0.1, 0.6] (numerically different from ‘Vietnam’)

A model can now calculate how similar these words are by comparing how close their vectors are in 2-dimensional space. This is usually done using a cosine similarity function.

In real-world applications, word embeddings are typically represented in much higher dimensions (e.g., hundreds or thousands), providing a more nuanced representation of multiple semantic relationships. However, the nominal principle of ‘similarities based on proximities’ in the embedding space remains the same.

Querying your local documents

Now that you are a bit familiar with embeddings, and have used GPT4All to generate embeddings for the Tricontinental Bulletin text file collection, you can perform a ‘RAG’ and ask questions about the collection!

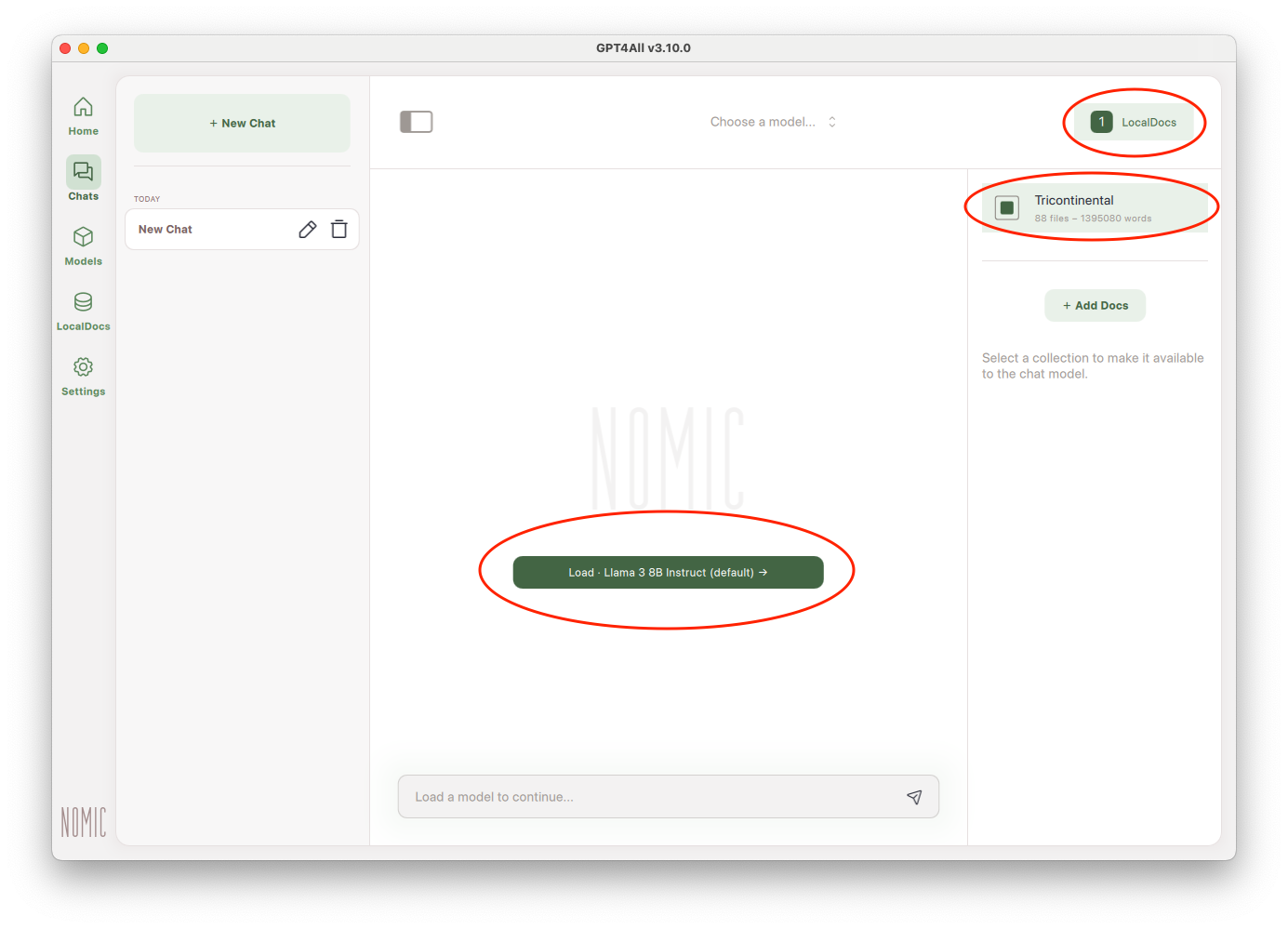

Select ‘Start Chatting’ to switch to the interactive screen. In the top right is the LocalDocs button, which opens a right-hand sidebar. Check the collection(s) you would like to use for this interactive chat session, and then load a language model:

Like the previous chapter, we will just start by treating this chat session like a search engine, and typing some keywords. Type Vietnam and press enter:

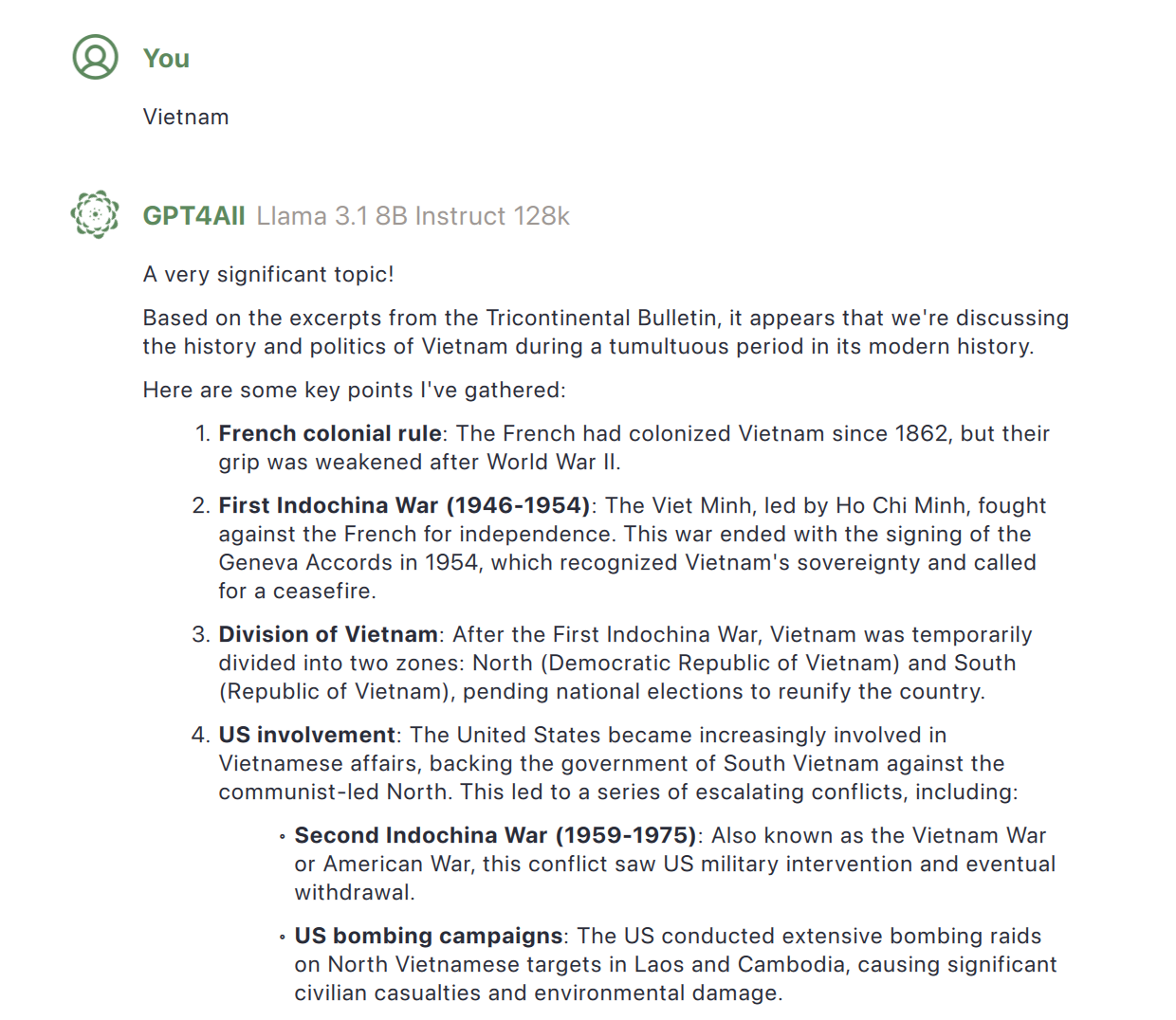

Behind the scenes, the application embeds your query to a vector. It then performs a similarity search for similar snippets from your local document collection, returning document snippets which have similar vectors to your original query. The snippets themselves (not their embedding vectors) are then passed to the LM along with your original query, and the model generates a (fairly lengthy) response:

So, even from what seemed to be a single keyword search, the LM has generated quite a lengthy and detailed response. It’s important to double check the citations it mentions at the end as well, especially if you are using these generated responses for something important – like learning what’s inside your collection! You can click to expand the list of sources, then click again on each source to open the text file.

You’ll also notice that three follow-up questions were generated at the end. GPT4All feeds your conversation back into the model and asks it to generate three related follow-up questions. You can view the prompt used to do this in application settings. The ‘Suggestion Mode’ questions can also be disabled in settings if you prefer to think of your own follow up questions.

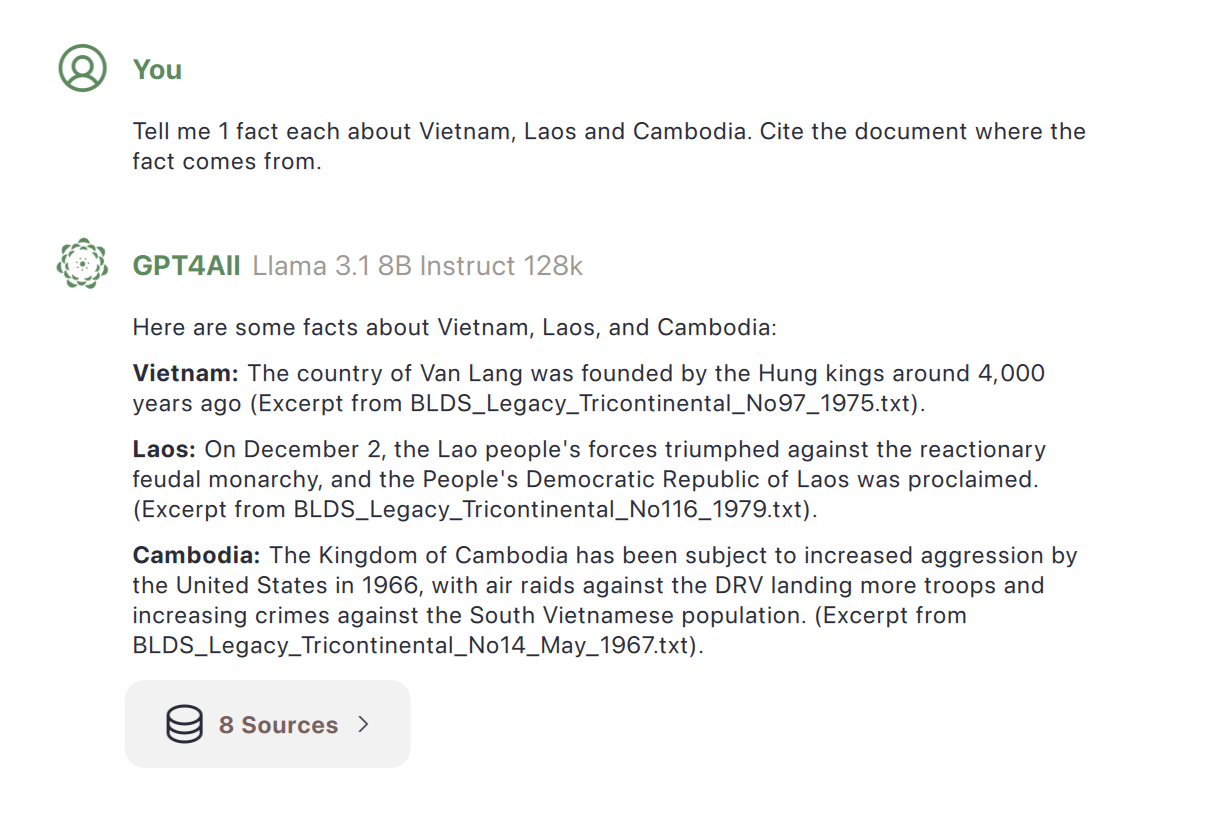

Let’s try another query, but with some natural language instruction about the returned information and format:

There appear to be 8 sources, but the model has correctly only returned 1 fact for each of the countries. To check the cited source documents, we open the relevant file and keyword search to find the relevant paragraph:

The country of Van Lang was indeed founded 4000 years ago. The model even seems to have read between the OCR errors! However, while this is phrased as a pertinent fact, manually looking in the No97_1975.txt document reveals there are other sentences which are less objective and more relevant to the military context of the OSPAAAL:

Colonialism had taken over Vietnamese territory in the second half of the 19th century and, invariably, the uprisings of the people were smashed one after another.

Why did the RAG select that specific sentence? Perhaps if we had asked for ‘1 military fact’ instead of just ‘1 fact’ this other sentence would’ve been used? (Try this prompt variation out for yourself).

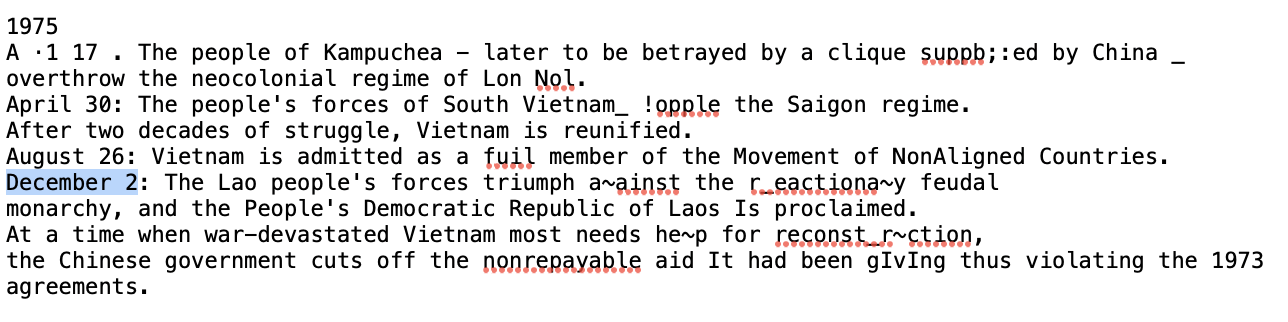

The second fact citation to check is Laos from the cited document BLDS_Legacy_Tricontinental_No116_1979.txt:

This is an interesting example of an almost direct citation. The OCR errors have been corrected and past tense has been used. Does this change the meaning? (Sometimes a model’s ‘character corrections’ or ‘paraphrasing’ can effectively change the meaning of the sentence! This is something to watch for when using RAG technology to search collections.)

There are 6 mentions of ‘Laos’ in the above document. The only one which is phrased factually is the one above. The other 5 mentions, including OCR errors and some context, are below:

In the 1950s, the Chinese rulers drew up a map of their country that included areas of other cou ntri es t hat are now independent. For examp le , Vietnam, Laos, Kampuchea , Burma, Malaysia, Nepa l, Thailand, a part of India and parts of the Soviet Union appeared on this map as parts of Chinese territory.

We are sure t hat th e Peking lead ers will receive another well-deserved blow if t hey attack Laos.

We are sure t hat th e Peking lead ers will receive another well-deserved blow if t hey attack Laos. Now and in t he future, even more than in the past, our people s of Laos, Vi etnam an d Kampuchea are determined to unite to fight and win in order t o frustrate the si nist er maneuve rs of China’s expan sioni st reaction .

Meanwhile, China is now threat ening the people of Laos and has become a perm anent danger to peace in the area an d the world.

Like the peoples of Vietnam and Laos, the Kampuchean people will win .

These are not very ‘factual’ phrases, which suggests that during the retrieval the model has used some internal representation of what a ‘factual phrase’ is, and applied this grammatical aspect as a similarity measure to augment and generate a response. Good to know! Quite a subtle effect, if this is indeed what the model did! (We can only infer this from the observed behaviour).

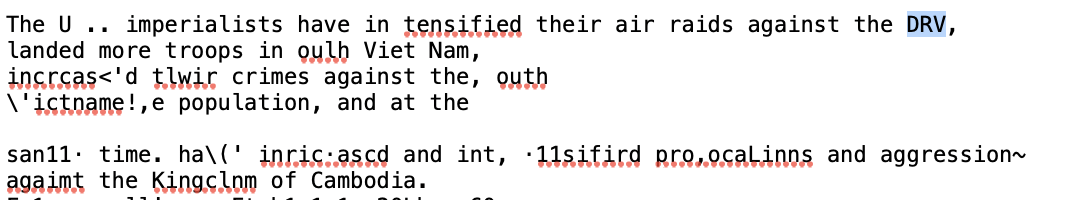

Final fact to check is about Cambodia, from the document BLDS_Legacy_Tricontinental_No14_May_1967.txt:

It is more difficult to pinpoint the exact source sentence in this document, as there are 32 mentions of Cambodia, but by searching for other nouns in the generated response, like ‘DRV’, narrows it down. The OCR is particularly terrible in this example, but again, and surprisingly, the model’s response has regenerated it with what appears to be correct text.

Going deeper

Hopefully by now you have an improved understanding of what RAG is, how to use it, and what to pay close attention to when you do.

When you think of search engines you probably know they somehow indexed your documents and metadata to enable you to search. RAG does something similar: by creating semantic embeddings you are searching what your content means and not just matching relevant keywords. This helps RAG grasp what you’re really asking for, bridging that gap between old-school search and natural language understanding.

Language models have what we call ‘context windows’, basically how much text they can see at once. When you upload a PDF directly into an LLM conversation, the entire document has to squeeze into this limited space. RAG is more selective, pulling only the relevant pieces from your documents when needed. This means you can work with huge document collections without overwhelming the system.

One of the nice things about RAG is that it creates a persistent knowledge base which you can use again and again. Once your documents are processed (i.e. converted to embeddings), the system remembers them across sessions without having to re-analyse everything. This cuts down on network traffic since you’re only retrieving small, relevant chunks when needed—making everything faster and more efficient.

Well-designed RAG systems also help us to keep track of where information comes from. When it tells you something, it can point to exactly which snippet, document, page, or paragraph it was found in. This source tracking builds trust and helps you to verify claims or cite original sources.

Hopefully this chapter has given you hands-on experience with RAG, and some useful ideas and starting points to help you bring this fascinating technology into your own research over the coming years. I can’t wait to see the creative research applications you can come up with!