8 The 1–2-3 Feedback Cycle

Mike Hobbs and Elaine Brown

Introduction

Student engagement covers a variety of contexts (Healey et al., 2014) but it is ‘engagement with learning’ that has often been achieved through student-centred approaches that are also used in Active Learning (AL). Engaging students with the content and curriculum is a major topic in education research, policy and practice. The QAA Code on student engagement suggests that although this theme has a long history it is now focused on both quality issues and ‘improving the motivation of students to engage in learning and to learn independently’ (QAA, 2012: 4).

More recently, student engagement has included the idea of students as partners, or co-creators, contributing to what and how courses are assessed as well as being involved in wider aspects of quality and organisation of the institution (Healey et al., 2014). However, to perform this extended role, a student needs good academic literacies and an awareness of assessment processes. Encouraging student contribution to content and inclusion in the assessment process helps build their competence and confidence to engage more deeply with their subject, their course and institution.

Gibbs and Simpson (2004) reviewed the role of feedback and assessment and provide a framework to support learning. In particular, peer review is identified as a way to provide timely feedback and increased ‘time on task’. To provide coherent feedback on other students’ work, students need to understand assessment criteria and must apply their knowledge through analysis and evaluation. Working in groups offers the opportunity for students to share knowledge, co-develop ideas, and improve communication skills (Boud et al., 2001).

The 1–2-3 Feedback Cycle presented in this chapter is based on Gibbs’ (1988) Reflective Cycle. The task we designed uses an authentic case study with genuine analysis and group discussion, allowing a range of legitimate answers depending on student interpretations.

The pedagogic context

A simple definition for Active Learning (AL) is given by the UK Higher Education Academy (HEA, 2018) as follows:

A method that encourages student engagement through activity, group discussion, experimentation and role-play, in contrast to the passive memorisation of information (online)

AL is a constructivist educational theory, characterised by the need for students to build (construct) their knowledge. The broad definition of AL can include traditional learning activities such as listening and making notes. However, it is more usual to include higher order thinking tasks such as analysis, synthesis and evaluation (Bonwell and Eison, 1991). AL is associated with student-centred, enquiry/problem/discovery-based learning and a desire to develop learners as well as impart knowledge. Team-Based Learning (TBL) is another example of a student-centred approach credited with improved results and engagement with subject content (Michaelsen and Sweet, 2011). This study shares some aspects of TBL including the use of permanent teams, preparing students for the task, providing a significant problem for students to consider, peer evaluation, and whole class discussion to provide immediate feedback.

These concerns for developing the learner are echoed by organisations such as the UK Commission for Employment and Skills (UKCES, 2015) and employer agencies, which put emphasis on the need for better graduate soft skills. Shadbolt (2016) emphasises the urgency in addressing softer and work-readiness skills, to complement the technical skills that will make STEM (i.e. Science, Technology, Engineering, and Mathematics) students more attractive to employers.

The motivation for change

The focus of this study is a 30-credit module, Fundamentals of Design, which students take in their first semester for the BSc Computer Science. This cohort also take the modules Introduction to Programming and Computer Systems, which have quite different pedagogic requirements. Computer Systems focuses on knowing the properties of components and how they work together; programming is a skill that requires understanding, application and practice. However, for both of these, at this level, there is an explicit ‘right way’ or optimum solution to any problem. This way of thinking is reinforced when students are programming, as they receive a form of instant feedback from the compiler (a computer program which translates one computer language into another), which shows basic errors in their code.

Conversely, in the design domain, which is the context for this study, there are many plausible solutions, which need to demonstrate the correct application of the basic principles, but can be different yet equally valid depending on the view of the designer. In industry, practitioners typically start their career with programming and gradually move into more senior roles as they gain the experience required to analyse and design information systems. In Fundamentals of Design, even at this entry level, we need to consider the development of the learner and their higher-level skills as well as the correct application of techniques.

The principal motivation for reviewing delivery of this module was to improve the pass rate and the mean and median marks, and thus improve the student experience. Although student satisfaction, as indicated by the standard institution evaluation survey, was acceptable, it was not particularly high, and free text comments highlighted a lack of understanding about the nature of analysis for design. It seemed that students were focused on easily identified programming skills, but did not appreciate the more nuanced analytical skills required for design thinking, leading to frustration and a lack of deeper understanding of the assessment criteria.

Methodology

The regularly repeated deliveries and the involvement of teaching staff make Action Research (Norton, 2009) a suitable methodology, as it aligns well with the normal review process, and provides a direct link between the research and the improvement of practice. Kemmis et al. (2014) characterised educational action research as spiralling circles of problem identification, systematic data collection and analysis, followed by reflection, data-driven action, and problem redefinition. This study broadly follows this process by splitting the activity into three main phases: the initial state before any specific action had been taken; the state after action had been taken; and the state after a final refinement of the action.

For each phase, analysis of the available data, both quantitative and qualitative, was used to frame the explicit problem to be addressed, followed by a review of the data after the action had been taken, and reflection on the outcomes.

Phase 1 – Initial state and course re-design requirements (prior to 2013/14)

Students achieve the learning outcomes for Fundamentals of Design through analysis and documentation of an information system case study using the industry standard UML (Unified Modelling Language) diagram design language (Rumbaugh et al., 2005). This remains a practical and professionally relevant outcome for the module, so, despite the need to change the delivery, the core purpose and content remained the same throughout the study. The ability to read and write design diagrams is an essential skill for a career in computing, and as students are expected to use these techniques in other modules, they need to demonstrate competence for this learning outcome in this module. Contact time consisted of a one-hour lecture and a two-hour seminar/practical class with 20–30 students, in four separate classes. For any given delivery there were three or four teachers: two experienced lecturers and two part-time post-graduates.

The delivery pattern introduced a topic in the lecture, followed by formative exercises carried out in class. The aim was for students to understand, and apply, the analysis techniques represented by five different types of UML diagram. This delivery pattern was supplemented with practical work involving creation of an Access database demonstrating the link between design and implementation. Assessment for the module was through a large, single case study that brought together the analysis and application of five UML diagram types, plus an in-class demonstration of the Access database. The content was supported by material (including lectures, class exercises, and sample answers) and links posted onto a VLE (Virtual Learning Environment) as well as specific readings for each topic from the course book.

A significant problem was that students focused on the final assessment and, to some extent, regarded both lectures and seminars/practicals as optional. Lecture attendance was low, settling around 50 per cent, and it was difficult to get students with an existing, but shallow, knowledge of the topic to engage and develop their skills. This was characterised by superficial assignments that used diagramming conventions such as flow charts, which are regarded as precursors to UML.

Any revised approach needed to help students improve results, gain a deeper understanding of the design ethos, and establish good learning habits for future modules, while maintaining or improving the efficiency of the delivery for staff. As this module is delivered in the first semester of the first year, it needs to support the transition between school-based ‘teaching’ and university-based ‘learning’ environments. The new design introduces responsibility for learning and participation with assessment so that students will be more prepared to be co-creators and full partners in later modules.

The 1–2-3 Feedback Cycle

The re-design of the delivery and assessment process was based on the educational research about feedback and peer evaluation by Gibbs and Simpson (2004). From this guidance we used assessment as part of an AL strategy, increasing ‘time on task’, which was important for students to gain a sufficiently deep view of the subject. This ‘time on task’ was supported with a variety of timely feedback mechanisms to help students assess their subject knowledge, but also provided a template to develop an effective approach to learning. The result was the following weekly cycle repeated five times, once for each of the five kinds of analysis and UML diagram:

- Topic introduced in lecture (Week 1) – including whole audience exercises, Kahoot (2017) quiz sessions and highlighting supporting materials: reading, pod/video casts and external tutorials and videos such as Lynda.com (Lynda, 2017). The assessment for this topic is set for completion by the following week

- Later that week the topic and assessment are discussed in a seminar class, supported by class exercises to practise the concepts – all the assessments are available on the VLE from the start of the delivery

- Work is submitted after the lecture in the week following the introduction of the topic (Week 2)

- Generic feedback – posted immediately after the lecture on the VLE (Week 2). Common issues are identified and addressed through a list of key points and illustrative diagrams

- Detailed Annotated Feedback (provided between lecture and seminar class in Week 2) – Tutor provides detailed annotation on two scripts posted to the VLE for the first peer review class to use as discussion for the tutorial. Having read through the submissions, the tutor selects two scripts for marking; these are re-used in subsequent deliveries because common issues followed a predictable pattern

- Peer Evaluation in the same week as the submission (Week 2) – students review and annotate each other’s work; the tutor gives marks for participation and a ‘reasonable’ attempt; work is handed back in the next tutorial session (Week 3) with a letter grade
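The way the stages of the cycle above overlap from week to week can be laid out as a simple timetable. The following is a minimal sketch only: it assumes one new topic is introduced in each consecutive teaching week, which the text does not state explicitly, and the names `STAGES` and `timetable` are hypothetical.

```python
# Week offsets are relative to the week in which a topic is introduced,
# following the Week 1 / Week 2 / Week 3 labels in the cycle above.
STAGES = [
    (0, "Topic introduced in lecture; assessment set"),
    (0, "Seminar class: discussion and practice exercises"),
    (1, "Work submitted after the lecture"),
    (1, "Generic feedback posted on the VLE"),
    (1, "Detailed annotated feedback posted on the VLE"),
    (1, "Peer evaluation in seminar class"),
    (2, "Peer-reviewed work handed back with letter grade"),
]


def timetable(topic_count=5, first_week=1):
    """Map each teaching week to the (topic, activity) pairs that fall in it."""
    weeks = {}
    for topic in range(1, topic_count + 1):
        # Assumption: topic k starts in teaching week k (one new topic per week).
        start = first_week + topic - 1
        for offset, activity in STAGES:
            weeks.setdefault(start + offset, []).append((topic, activity))
    return dict(sorted(weeks.items()))


if __name__ == "__main__":
    for week, items in timetable().items():
        print(f"Week {week}")
        for topic, activity in items:
            print(f"  Topic {topic}: {activity}")
```

Under this assumption, a typical mid-module week contains activity for three topics at once: a new topic being introduced, the previous topic being submitted and peer reviewed, and the one before that being handed back.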

Student preparation

The six overlapping stages of the delivery cycle have at their core a simple three-step feedback cycle:

- Generic feedback published to deal immediately with any common errors while the submission is still in students’ minds

- Detailed annotated feedback produced to show what is needed but without providing a ‘correct’ sample answer – used to guide students when they give peer feedback

- Peer evaluation of the topic submitted earlier that week. Each seminar class marks work from a different class, identified only by Student ID number. Groups of three or four students jointly discuss the work before providing individual feedback. During this time the class tutor briefly joins each group to answer questions, give guidance, record who is present, and provide feedback

To prepare students, and help them engage with AL, we introduced the module and explained the purpose of peer review, and the potential advantages of the process. The pedagogic justification was also discussed so that students were aware of the deeper reasons for the change in assessment practice. Gibbs’ (1988) Reflective Cycle was used to help students understand the generic learning skills they needed to gain in addition to subject knowledge.

Figure 8.1 The 1–2-3 Feedback Cycle

Although more commonly used to support reflective writing, the stages of reflection provided a template to help students understand that they needed to develop their learning, as well as their knowledge of the subject. The stages of reflection map onto the stages of requirements analysis, initial design, reflection, design refinement, implementation and evaluation that are at the core of the systems analysis process being taught in the Fundamentals of Design module.

The introduction of the topic included an indication of the type of feedback expected: ‘not just compliments’, ‘constructive criticism’, ‘what was right as well as what was incorrect’, and ‘ways to improve’. It also covered how to behave when working in groups: ‘be open but have respect’, ‘criticise the concept not the person’, ‘assume you may be wrong’, ‘ensure all voices are heard’, and ‘work together to improve the outcome’. These ideas are ‘topped up’ in later lectures with reminders and explanation on how to give and use feedback.

In the first class a detailed marking scheme was provided, and discussed, so that students knew what was expected and how to grade differing levels of work (see Table 8.1 for sample guidance).

The assessment tasks (with marks out of 100):

- Five peer reviewed UML diagrams for the case study (5 marks)

- Demonstration of database design and queries in practical class sessions, feedback and marks given to students following demonstration (30 marks)

- Improved versions of the five UML diagrams based on the case study, submitted at the end of teaching (55 marks)

- A reflective commentary on how feedback was used to improve the work for the final submission (10 marks)

- Final submission to include paper copies of the database queries and all the in-class, peer reviewed diagrams

| Assessment criteria by level |
| --- |
| DIRECTIVES: Look at how well the case study has been analysed. • Does the diagram use the same vocabulary as the case study? • How well has the syntax been used to represent the case study? • Are inheritance and aggregation used correctly in the diagram? • Are there places where these relationships could have been used? • Are there any classes which are too generic, or ‘system’ classes? • Check the attributes and operations, are these true attributes, or are they values? • Do they belong in this class? • Do the operations change the values of the attributes? • Could they be operations, or are they physical behaviours from the case study that would not be implemented? • Do association relationships demonstrate cardinality? |

| Marking standards (by mark band) | Characteristics of student achievement per mark band |
| --- | --- |
| 7–10 Excellent | Excellent representation of the case study, excellent use of notation. Making full use of notation where it is appropriate. |
| 6–6.5 Good | Good use of notation, making mostly good use of all types of relationships, with cardinality specified. May be minor errors, such as incomplete cardinality, but diagram demonstrates correct representation of the case study. |
| 5–5.5 Satisfactory | Satisfactory use of notation, using inheritance and aggregation, maybe incorrectly. Likely to have too many classes. Classes maybe mostly correct with inappropriate attributes, or inappropriate operations. |
| 4–4.5 Basic Pass | Basic Class Diagram. Probably too few classes, or classes which are not required by the system (such as a system class), demonstrating a possible lack of understanding of the role of the diagram. Unlikely to have attempted inheritance or aggregation relationships. May also be lacking cardinality. |
| 3–3.5 Limited Knowledge | Limited Use Class Diagram, likely to have a limited number of classes, with no relationships modelled. |
| 0–2.5 Inadequate | Diagram unlikely to have been submitted. |
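The mark bands in the sample guidance can be read as a simple lookup from mark to band. The following is a minimal sketch, assuming marks out of 10 awarded in half-mark steps and treating the lower bound of each band as its threshold; the name `mark_band` is hypothetical and is not part of the module materials.

```python
# Band boundaries are taken from the marking guidance above; the function name
# and the treatment of lower bounds as thresholds are illustrative assumptions.
BANDS = [
    (7.0, "Excellent"),
    (6.0, "Good"),
    (5.0, "Satisfactory"),
    (4.0, "Basic Pass"),
    (3.0, "Limited Knowledge"),
    (0.0, "Inadequate"),
]


def mark_band(mark: float) -> str:
    """Return the band label for a mark between 0 and 10 inclusive."""
    if not 0 <= mark <= 10:
        raise ValueError("mark must be between 0 and 10")
    # Bands are listed from highest to lowest, so the first match wins.
    for lower_bound, label in BANDS:
        if mark >= lower_bound:
            return label
    raise AssertionError("unreachable: every valid mark falls in a band")


if __name__ == "__main__":
    for sample in (8, 6.5, 5, 4.5, 3, 2.5):
        print(sample, mark_band(sample))
```

A lookup like this only assigns the label; the judgement about which band a diagram deserves still rests on the directives and the characteristics described for each band.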

Student comments included:

‘A use case is meant to be a phrase written with a verb followed by a noun, three use cases have not included verbs.’

‘General understanding is well represented with knowledge of syntax.’

‘There is a use case description format available to view on Chapter 3: Britton and Doake where you can see […]’

Phase 2 – Results, reflection and refinement (2014/15, 2015/16)

Results – observations from delivery

The results of the feedback cycle were a noticeable improvement in the quality of the work and depth of analysis. This supports findings from previous studies of peer review (Boud et al., 2001; Dowse et al., 2018) that the knowledge that other students would be looking at their work, even anonymously, improved the presentation of the largely freehand diagrams.

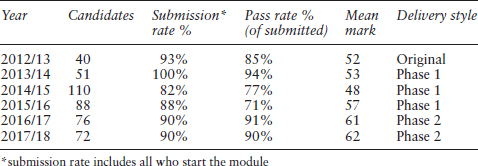

While it is difficult to measure engagement, we saw a decreased rate of non-submissions and an improved pass rate (as shown in the summary provided in Table 8.2); positive module evaluation comments also suggest that students had been focusing more closely on this topic. The ‘time on task’ increased considerably, and providing immediate feedback gave students help when they needed it, to improve their revised submission. The peer review process requires students to justify the marks they give, which also helps develop the same higher-order judgement skills required by this module.

The results from the first delivery in 2013/14 showed an increase in submission rates as well as the pass rate, but the mean mark remained modest (Table 8.2).

Critical success factors learned from first delivery

- Make students aware of the pedagogic process

- Ensure approach and materials are understood by tutors and supporting staff

- Include the student voice – the class seminar discussions revolve around the peer review where student opinions are valued

- Students act as partners within the class environment, making judgements about the validity of solutions and allocating marks in the peer review process

- Academic literacy: clear and continuing instruction to guide students on how to participate in learning activities and what to provide for assessment

- Vary feedback types: Vodcast, PowerPoint, online documents, links to other material, plus group and one-to-one discussions

- Ensure timely feedback so that students can use it

Figure 8.2 is a screenshot of the VLE showing a list of feedback items that had been provided to a student.

Key challenges and issues for the AL approach

Complexity – conveying the concept of AL (despite explicitly including this in lectures and seminars), and the practical details to students, proved to be a constant issue that was reflected in comments and low ratings in the evaluation questionnaire regarding the organisation of the module. The majority of students had no problems but a significant minority, with a poor attendance record, were confused.

Figure 8.2 Snapshot of VLE showing variety of feedback

Management – there were many small, paper-based components during delivery of the module that could easily become lost or overlooked. It quickly became apparent that students were concerned about every single mark, so everything had to be accurately accounted for. A small number of students with late or lost work, or other exceptional circumstances, caused a disproportionate increase in administration.

Assessment reward – getting the balance right between work and reward. The original concept was that the in-class element would be an initial ‘rough copy’ to be refined by the peer review and feedback process. However, students were putting considerable effort into this work. It seemed that the balance of marks did not take sufficient account of this and over-rewarded small improvements, which could be derived easily from the feedback given.

Contingency planning – students had to attend all relevant lectures and classes to fully participate in the feedback cycle. This made these sessions ‘worthwhile’ from a student perspective, but made it difficult for those who missed sessions.

Staff workload

The improvements in the results did not mean more work for staff. A key consideration was to make the learning materials and process clear and easy to deliver for all staff, so that the quality of the module did not depend on the performance of one remarkable tutor.

In the original delivery, the tutor provided detailed feedback on a large and complex piece of work, which took approximately 24 hours for 56 scripts, and the student gained feedback at the end of the delivery when there was no opportunity to utilise this in their work.

In the new delivery, the tutor spent eight-and-a-half hours preparing two batches of five sets of detailed feedback, equivalent to two old scripts, to create the detailed annotation. Additionally the tutor would spend time preparing generic feedback, but by re-using material, preparation times can be significantly reduced in future deliveries. With practice, time saved in subsequent deliveries allowed provision of additional resources, including explanatory video clips, rather than just marking large numbers of scripts.

Students now have feedback equivalent to four old-style scripts. More importantly, each student sees up to four examples of the five diagrams and, as well as providing their own work, gives feedback on another five diagrams.
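The marking figures quoted above can be put side by side with a little arithmetic. This is a rough sketch only: it uses the hours and script count stated in the text, it leaves out the time for generic feedback and for marking the revised diagrams (which the text does not quantify), and the variable names are hypothetical.

```python
# Figures as stated in the text for detailed feedback only.
OLD_MARKING_HOURS = 24       # original delivery: detailed feedback on all scripts
OLD_SCRIPT_COUNT = 56
NEW_ANNOTATION_HOURS = 8.5   # new delivery: two batches of five annotated scripts

# Average time per script under the original delivery.
minutes_per_old_script = OLD_MARKING_HOURS * 60 / OLD_SCRIPT_COUNT

# Difference in tutor time spent on detailed feedback; generic feedback
# preparation is not included because no figure is given for it.
hours_difference = OLD_MARKING_HOURS - NEW_ANNOTATION_HOURS

if __name__ == "__main__":
    print(f"Original delivery: about {minutes_per_old_script:.0f} minutes per script")
    print(f"Detailed feedback time differs by {hours_difference} hours")
```

The comparison covers tutor time on detailed feedback alone, so it should be read as an indication of where the time moved rather than a full workload model.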

The feedback for the revised diagrams used the peer review marking rubric, which also allowed individual comments to be inserted. This precluded the need for extensive feedback, as this had already been delivered during the module.

Assessment refinement

Submission and marking

The database task is used as an illustration of how a design can be turned into a working system, more as a prototype to check the logic than a serious implementation. As such, it is peripheral to core content and is dealt with in a second year compulsory module. Tasks remained the same but guidance reduced the emphasis on theoretical knowledge and marks were decreased from 30 per cent to 10 per cent.

Marks for the in-class peer review were increased from 5 per cent to 20 per cent for the five diagrams (four marks each). This allowed an element of fine grading: one mark for participation, one mark for work that was significantly wrong but showed some understanding, two marks for work that had errors but showed understanding of the technique, and full marks awarded for work that was largely correct but may still have some errors. The aim of this revised scheme was to reward learning and participation rather than the specific output.

The revised, ‘neat’, diagrams were submitted to Turnitin, which gave staff an opportunity to check that students were still engaged, and that they understood the electronic submission process.

Ten per cent of the total mark was allocated for submission of the ‘neat’ diagrams. This was regarded as high enough to encourage submission but not so high that it over-rewarded a relatively simple task. The main point of this step was that if students failed to submit, or performed poorly, they would be able to recover and could be identified easily for extra support before the final submission.

The final assessment mirrors the tasks and techniques of the previous assessment but applied to a different case study. This means that feedback for the in-class case study cannot be repeated ‘parrot fashion’ back for the final assessment but must be applied to a different situation. As the final assessment case study is now a substantial and definitive component for the module, it attracts a significant mark of 60 per cent. Submission was online via Turnitin and the rubric was still used to justify the marks, but free text comments and a library of re-useable comments (‘quick marks’) for repeated errors were used to give feedback on the scripts.
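The shift in emphasis between the original and revised assessment schemes can be seen by setting the weights side by side. The sketch below is illustrative: the weights are those stated in the text, while the component names, the `overall_mark` helper and the sample scores are hypothetical.

```python
# Weights are percentages of the module mark, as stated in the text.
# Component names and the sample scores below are illustrative assumptions.
ORIGINAL_WEIGHTS = {
    "peer_reviewed_diagrams": 5,
    "database_demonstration": 30,
    "improved_diagrams": 55,
    "reflective_commentary": 10,
}
REVISED_WEIGHTS = {
    "peer_reviewed_diagrams": 20,  # 'rough': five diagrams, four marks each
    "database_demonstration": 10,
    "neat_diagrams": 10,           # 'neat': revised diagrams sent to Turnitin
    "final_case_study": 60,        # 'applied': techniques on a new case study
}


def overall_mark(weights, scores):
    """Combine component scores (fractions from 0 to 1) into a mark out of 100."""
    total_weight = sum(weights.values())
    if total_weight != 100:
        raise ValueError(f"weights sum to {total_weight}, expected 100")
    return sum(weight * scores[name] for name, weight in weights.items())


if __name__ == "__main__":
    sample_scores = {
        "peer_reviewed_diagrams": 0.75,
        "database_demonstration": 0.5,
        "neat_diagrams": 1.0,
        "final_case_study": 0.5,
    }
    print(overall_mark(REVISED_WEIGHTS, sample_scores))
```

Both sets of weights sum to 100. In the revised scheme a quarter of the marks that previously rewarded the database task and the improved diagrams now rewards participation in the in-class peer review and the ‘neat’ resubmission.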

Assessment complexity and management

After repeated deliveries, staff were able to avoid issues that had occurred previously and could provide examples for students. Three assessment types were described to students: ‘rough’, ‘neat’ and ‘applied’. A ‘rough’ design is typically hand-drawn, fluid, flexible, discussed and improved through peer review. A ‘neat’ submission gave students the chance to demonstrate what they had learned from feedback. The ‘applied’ submission demonstrates how students can use their learning in different situations. The changes to the submissions, and the move to electronic submission and marking, also made the module much easier to manage.

Content presentation on the VLE was also redesigned so that all materials, exercises, and feedback for a particular week were shown as part of a single web page rather than having different areas for lecture materials and homework. This provided a ‘one-stop shop’ for students who could highlight significant dates, processes, and content.

Contingency planning

As a first year, first semester module, students need to be able to make mistakes as they take more responsibility for their learning than they may have been used to at college or school. This is acknowledged by the ARU academic regulations, which require first year modules to be passed but do not count them towards the final mark. With its many components, the Fundamentals of Design module is a challenge for some and, once a single assessment has been missed, it is easy for this to become a negative experience that demotivates the student. However, there needs to be a clear demarcation between those who contribute to a class and those who, for whatever reason, do not. There is a fine balance between rewarding performance and motivating engagement and improvement. Our clearly stated ‘contingency planning’ policy was that if a student misses a submission they can submit the work via email, as long as it arrives before feedback is published, and will receive brief feedback, but with a maximum of half the available marks. The idea is to reward attendance but allow some flexibility without causing a critical drop in marks.
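The effect of the half-marks ceiling can be sketched as a small function. This is an illustration under stated assumptions: it models only the case described above (work emailed before feedback is published), it does not cover work arriving later because the text does not say how that is treated, and the name `capped_mark` is hypothetical.

```python
def capped_mark(awarded: float, available: float, late_by_email: bool) -> float:
    """Apply the half-marks ceiling to work submitted late by email.

    `awarded` is the mark the work would otherwise receive and `available`
    is the maximum for that task. Work submitted on time is left unchanged.
    """
    if available <= 0:
        raise ValueError("available marks must be positive")
    if not 0 <= awarded <= available:
        raise ValueError("awarded mark must be between 0 and the available marks")
    if late_by_email:
        # Late work can earn at most half of the available marks.
        return min(awarded, available / 2)
    return awarded


if __name__ == "__main__":
    # Example: one peer-reviewed diagram carrying four marks.
    print(capped_mark(3, 4, late_by_email=True))
    print(capped_mark(3, 4, late_by_email=False))
    print(capped_mark(1, 4, late_by_email=True))
```

Because the ceiling is a maximum rather than a deduction, weaker late work keeps whatever mark it earned, which matches the stated aim of allowing flexibility without a critical drop in marks.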

Phase 3 – Results and reflection (2016/17, 2017/18)

The changes outlined in assessment refinement (above) have now been run in both 2016/17 and 2017/18. Data from all deliveries is provided in Table 8.2. Although there is a gradual increase in the mean mark of work submitted, there was a set-back in 2014/15, when student numbers doubled.

Table 8.2 Results for Fundamentals of Design

The submission rate and pass rate for 2012/13 and 2013/14 were only calculated for students who completed the module, rather than all who started it. These data are not comparable to other data as they do not include students who dropped out during the course, thus underestimating the total number and overestimating the pass rate and mean mark.

Conclusion

The learning and assessment activities described in this study help students make the transition from being passive receivers of knowledge to taking a more active role in all aspects of their learning. For some students, the change in emphasis is unsettling and, initially, they find it hard to trust their own, or other voices, that are not endorsed by the tutor. As well as delivering knowledge of a subject, each module in a course needs to develop some aspect of the student as an independent learner or practitioner.

Student involvement in the assessment process also allows teaching staff to focus pro-actively on supporting students in an AL context, rather than the traditional passive delivery and post-hoc evaluation. We have also incorporated student input into the assessment process through peer review. This increases student assessment literacy and helps to provide a mechanism for meaningful input into content and curriculum development.

Changes to the assessment increased students’ ‘time on task’ and provided a deeper challenge as they still learn from the exercises and feedback, but now have to demonstrate their understanding by applying these principles to a new case study. The concepts to be learned have remained the same, but the level and depth of learning has increased without a significant increase in workload for students or staff. This qualitative improvement is somewhat hidden by the quantitative results for the module, as marks are typically allocated to the degree of attainment for a given learning task, but the same relative performance for an easier task would give the same result. However, based on the overall level of submissions, total engagement has improved, and mean marks for submitted work have also improved.

The action research process provided a framework for continuous analysis of module delivery, and helped to align the curriculum to represent, and change, student views and expectations. The key mechanism for this has been the use of feedback in an AL context. Although based on the development history of a single module, the generic lessons learned are clearly applicable to other disciplines.

In this module, at the start of their experience of a university education, we have attempted to effectively teach the subject but in doing so have also provided a template for independent study. We provided scaffolding for students to develop good learning practice by getting students to expect and use feedback as an integrated part of their learning, spread throughout the delivery of a module.

References

Bonwell, C.C. and Eison, J.A. (1991) Active learning: creating excitement in the classroom, School of Education and Human Development, George Washington University. Online. https://eric.ed.gov/?id=ED336049 (accessed January 23 2018).

Boud, D., Cohen, R. and Sampson, J. (2001) Peer Learning in Higher Education: Learning from and with each other, Kogan Page.

Dowse, R., Melvold, J. and McGrath, K. (2018) ‘Students guiding students: Integrating student peer review into a large first year science subject. A Practice Report’. Student Success, 9(3), 79. Online. https://studentsuccessjournal.org/article/view/471 (accessed September 7 2018).

Gibbs, G. (1988) Learning by Doing: A Guide to Teaching and Learning Methods. Oxford: Oxford Further Education Unit.

Gibbs, G. and Simpson, C. (2004) Conditions under Which Assessment Supports Students’ Learning. Learning and Teaching in Higher Education, 1(1), 3–31. Online. https://www.open.ac.uk/fast/pdfs/GibbsandSimpson2004–05.pdf (accessed January 23 2018).

HEA (2018) Active Learning. Online. https://www.heacademy.ac.uk/knowledge-hub/active-learning (accessed March 27 2019)

Healey, M., Flint, A. and Harrington, K. (2014) Engagement through Partnership: Students as Partners in Learning and Teaching in Higher Education. Online. https://www.heacademy.ac.uk/system/files/resources/engagement_through_partnership.pdf (accessed January 23 2018).

Kemmis, S., McTaggart, R. and Nixon, R. (2014) ‘Doing Critical Participatory Action Research: The ‘Planner’ Part’. In The Action Research Planner. Singapore: Springer Singapore, 85–114. Online. http://link.springer.com/10.1007/978-981-4560-67-2_5 (accessed January 24 2018).

Michaelsen, L.K. and Sweet, M. (2011) ‘Team-based learning’. New Directions for Teaching and Learning, 2011(128), 41–51. Online. http://doi.wiley.com/10.1002/tl.467 (accessed January 24 2018).

Norton, L. (2009) Action research in teaching and learning: a practical guide to conducting pedagogical research in universities, Routledge.

Oxford Brookes University. (2013) ‘Reflective Writing: About Gibbs reflective cycle’. Online. Oxford Brookes University, 1–4. https://www.brookes.ac.uk/students/upgrade/study-skills/reflective-writing-gibbs/ (accessed January 25 2018).

QAA. (2012) Chapter B5: Student Engagement. UK Quality Code for Higher Education. Quality Assurance Agency for Higher Education QAA. Online. http://www.qaa.ac.uk/publications/information-and-guidance/uk-quality-code-for-higher-education-chapter-b5-student-engagement (accessed January 23 2018).

Rumbaugh, J., Jacobson, I. and Booch, G. (2005) The Unified Modelling Language Reference Manual, Addison-Wesley.

Shadbolt, N. (2016) Shadbolt review of computer sciences degree accreditation and graduate employability, Department for Business, Innovation and Skills and Higher Education Funding Council for England, London. Online. https://www.gov.uk/government/publications/computer-science-degree-accreditation-and-graduate-employability-shadbolt-review (accessed January 23 2018).

UKCES. (2015) Reviewing the requirement for high level STEM skills. UK Commission for Employment and Skills.