Re-igniting the personal touch: Using video to improve feedback with Foundation Year students

Wendy Garnham and Heather Taylor

Outline

Feedback is the one factor that is paramount to student outcomes (Hattie, 2009). Yet in recent years, it has become clear that this same factor is consistently rated lower than others in National Student Surveys (HEFCE 2010, http://www.hefce.ac.uk/learning/nss/). The reasons for this lower rating appear to be multifactorial and include factors such as difficulty in understanding the meaning of written comments in relation to assignments, a lack of advice about how to feed-forward with improvements and a focus on spelling and grammar which Duncan (2007) refers to as the mechanical aspects of a task.

It is not just students who have raised dissatisfaction with feedback processes. As student numbers increase, the opportunity for tutors to interact with and support individual students becomes limited. As Carless, Joughin and Liu (2006) identified, tutors often complain about the time-consuming nature of feedback. Such concerns could have implications for the timing of when feedback is released, the detail given in feedback and the frequency of feedback, all of which are included in Gibbs and Simpson’s (2004) outline of the six key drivers of positive and effective feedback.

These concerns have led to a focus on alternative modes of feedback such as audio feedback. Merry and Orsmond (2008) and Ice et al. (2007) both report positive benefits of using this. For example, Merry and Orsmond’s participants reported that the use of intonation in audio feedback helped them to understand the content of the feedback better, and Ice et al. found that audio feedback led to a feeling of increased involvement and greater retention of content than written feedback. Ice et al. used auditory feedback to try to create a sense of community amongst online learners. Student satisfaction with audio feedback was reported to be “extremely high”, with students perceiving it to be associated with the tutor caring more about the student. Ice et al. suggest that the effect of using audio feedback was to increase both feelings of involvement and community interactions.

Ice et al.’s findings have been replicated elsewhere. For example, Gould and Day (2013) found audio feedback to be seen as more personalized and supportive than written feedback, as well as more detailed. Even in terms of accessing the feedback, Lunt and Curran (2010) suggest that students are at least 10 times more likely to open audio files than to collect written feedback.

However, tutors often have mixed feelings about audio feedback as demonstrated by Cavanaugh and Song (2014) in their study of online learning for a composition course. Even though students were positive about the use of audio feedback, mentioning the ability to pick up cues from the tutor’s voice, paying closer attention to the content and the increased level of detail used, tutors raised concerns about the level of clarity in their audio comments, technological challenges and the difficulty for students in locating specific areas referred to in the text.

On closer inspection, even student attitudes towards audio feedback are not completely positive. In Gould and Day’s (2013) study, one third of their sample stated a preference not to have audio feedback for any of their work. Similarly, McCarthy (2015) compared audio, written and video feedback in a digital media cohort and reported that only 12% of his sample stated a preference for audio feedback. Rodway-Dyer, Knight and Dunne (2011) used audio feedback with first-year Geography students. Although the majority of students felt that audio feedback provided a useful experience and contained more detail than written feedback, focus group responses accentuated the negative aspects such as the feeling of being told off. Chiang (2009) similarly found that audio feedback was rated as the least favourite method of feedback. One of the reasons stated is that audio feedback lacks a visual component, which can reduce the efficacy of the feedback. Mayer (2001) maintains that a combination of visual and auditory feedback is optimal. Mayer’s dual coding hypothesis suggests that when words are presented auditorily alongside pictures, learners can integrate these more easily due to separate processing systems for auditory and visual information in working memory (Mayer & Moreno, 1998). Video feedback, particularly in the form of screen-casting, addresses this issue, and 66% of McCarthy’s participants stated a preference for this.

The use of screen-casting as a means of giving feedback has since proliferated (e.g. Ali, 2016; Mayhew, 2016; Thomas, West & Borup, 2017; Harper, Green & Fernandez-Toro, 2018). Benefits reported include improved communication (Cranny, 2016), improvements in quality of writing (Moore & Filling, 2012) and improvements in learner engagement (Hynson, 2012). Bakla (2017) argues that screencast feedback allows teachers to engage in dialogue with students and that this promotes comprehension and engagement. As with audio feedback, benefits claimed for the use of screen-casting feedback include an increase in student engagement (Mathisen, 2012), efficiency in marking (Brick and Homes, 2008) and detail (Bakla, 2017). Harper, Green and Fernandez-Toro (2018) used screencasting to give feedback to online language learners. The authors were particularly struck by the affective impact of giving feedback in this way, with positive emotional consequences reported for both tutor and student. Not only did screen-casting enable tutors to feel that they were engaged in meaningful dialogue with their students, but students also felt that the feedback was more personal and their work more valued. The importance of creating dialogue in feedback has been raised previously by Nicol (2010) and similarly Harper et al. argue that the personal dimension is an essential underpinning of the effective use of feedback by students. Price et al. (2010) argue that the relationship between tutor and student is critical to the process.

One potential limitation with the majority of studies using screen-casting is that the video feedback given to students shows only the submitted assignment with accompanying audio. For example, in McCarthy’s comparison of audio, video and written feedback, the screen-casting used for video feedback contained narrated, visual feedback but no image of the tutor themselves giving this in real-time. Similarly, Thompson and Lee (2012) used screen-capture to show the submitted assignment with audio narrative but no image of the tutor themselves. Harper, Green and Fernandez-Toro (2018) similarly displayed the text only with audio on the screencast.

Where an image of the tutor themselves has been shown, this has been received positively. For example, in Mayhew’s (2016) study of video feedback, students were able to see their submitted assignment on the left of the screen and a video of their tutor giving feedback on this. Mayhew states this was to ensure that the feedback was both detailed and personal. 90% of participants preferred the video feedback to written feedback and 72% gave positive feedback regarding the inclusion of the tutor’s face in the video. The majority of students (78%) also said that the video feedback prompted them to revisit the subject material more than they thought written feedback would have done. Thomas, West and Borup (2017) also used a webcam for tutors to give feedback. They reported a tendency for instructors to use more humour, to self-disclose more and to compliment students more in video feedback.

One of the limitations of this research is that there is little attempt to directly compare students’ perceptions of traditional written feedback, screen-cast feedback with audio and video feedback showing the tutor directly. The Feedback Project compares these three different modes of giving feedback: the traditional written feedback approach, the use of screencast feedback where the tutor is not shown (we will refer to this as the Script Video condition), and the use of screencast feedback where the student can see the tutor giving the feedback in real-time (which we refer to as the Tutor Video condition). It was anticipated that the physical presence of the tutor in the feedback video would enhance the personalization of the feedback and lead to greater student satisfaction with the feedback.

The “How to” Guide

- Open the student’s script on your screen.

- In a separate tab, sign in/register to Zoom: http://zoom.us

- Select “Host a meeting” from the top left hand side of the screen.

- You can select whether you want the video image of yourself to be shown or not using the “Video on” and “Video off” options.

- You will then be asked to join the meeting and should see your picture on the screen.

- To share the screen showing the student’s work, click the “Share screen” option at the bottom of the window and select which screen you want to use.

- You will then see a little box with your picture in it on the top right of the screen and the student’s script on the main screen.

- If you click the option at the top of the screen that says “More” you will see the record option there. When you are ready click this and it will record both the student’s script and your video feedback.

- If you want to annotate the script as you go, there is the option to do that also at the top of the screen. You can highlight or mark the script as you go.

- When you have finished, the recording will save as an MP4 file which you can then upload or forward to the student.

How we used this

Eighty-seven participants from a cohort of 192 Foundation Year students studying on an Occupational, Social and Applied Psychology module took part. Of the 87 participants who gave consent to take part, 19 were male and 68 were female. This was representative of the gender balance in the cohort. Participation in the Feedback Project was optional and did not carry any course credit.

Participants were expected to complete an independent piece of research as part of their coursework. All students were asked to submit three assignments relating to this during the course of the Spring Term, two of which were formative assignments and one of which was a summative assignment. For one of these assignments, traditional written feedback was given; for a second, a screen-cast video showing the script being marked in real-time with audio feedback was given; and for a third, a video showing the script being marked in real-time, together with a video of the tutor themselves marking it, was given.

A free video technology resource called Zoom (http://zoom.com) was used to record both the Script Video and Tutor Video modes of feedback. Written feedback was provided using the standard Turnitin software traditionally used for marking electronically submitted assignments. An example of the survey used to collect student opinions can be found in Appendix 1.

Formative Assignment 1

The first formative assignment required students to complete a research question analysis. Students were asked to complete a template which required them to create a research question from a limited set of themes relevant to the module and to explain their thinking about how they might test this.

Formative Assignment 2

The second formative assignment required students to produce an annotated bibliography, showing evidence of wider reading as well as demonstrating their ability to reference correctly.

Summative Assignment

The summative assignment required students to submit a lab report using APA style conventions. The lab report should be an account of their own independent research, what they did, what they found and how it related to the existing literature in that area.

Students were informed in the first seminar of the Spring Term about the Feedback Project aims and were given the opportunity to participate in the project, giving them the full ‘360 degree’ experience of research, playing both the role of participant in the project and as a researcher in their own independent research. Those who wished to take the opportunity to participate were asked to complete a consent form on Google Forms.

Before each assignment was due, the tutor provided an exemplar of the work required using the Feedback Project as the focus. Participants could then use this to guide their own production of a similar piece of work using their own independent research as the focus.

In giving feedback on each assignment, the tutor either used Turnitin to give traditional written feedback (written condition), used Zoom to record audio feedback with a video of the script shown (script video condition) or used Zoom to record both audio feedback with a video of the script shown and a video of the tutor marking the script in real-time (tutor video condition).

One week after the feedback had been received, participants were asked to complete a survey on Google Forms to record their experience of the feedback. Ethical approval was granted by the University of Sussex Research Ethics Committee: Ref: ER/WAG23/2.

The Successes (what worked well)

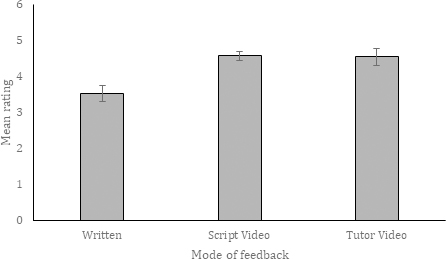

The video feedback was seen as more personal than the written feedback.

Regardless of whether the tutor was part of the video or not, students reported that the video feedback was more personal than the traditional feedback, as Figure 1 illustrates. Traditional written feedback was seen as significantly less personal than either of the video types of feedback: X2(2)=13.42, p<.001.

The majority of qualitative responses were also positive regarding the use of video feedback (both Script Video and Tutor Video), with participants highlighting how video feedback was more engaging than written feedback and forced them to actively listen rather than skim over the feedback. For example:

“Easy to understand, more engaging than written feedback”

“It is very personal and it does help in paying attention and actually forces you to think about the feedback given.”

Many students pointed to the personal, specific nature of the feedback given in the Tutor Video condition as a positive aspect:

“It’s more personal as you hear the person’s voice and it’s like they are talking to you directly.”

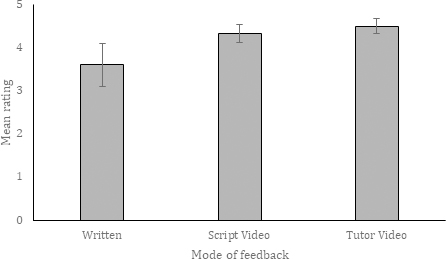

At time-point 2, written feedback was again perceived to be less personal than the video feedback (Written: M=3.6, SD=1.14, Script Video: M=4.33, SD=0.52, Tutor Video: M=4.50, SD=0.65). Although this suggests a similar trend to that seen at time-point 1, at time-point 2 this effect failed to reach significance: X2(2)=3.50, p>.17. As shown in Figure 2, this could be due to the larger variation in ratings given by those who received the written feedback.

Video feedback was reported to be more inclusive than traditional written feedback

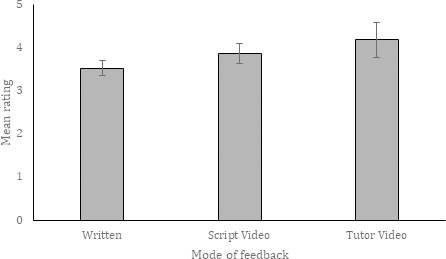

Video feedback with the tutor present was rated as the most liked (Written: M=3.52, SD=0.81, Script Video: M=3.86, SD=0.86, Tutor Video: M=4.18, SD=1.33), although the difference in ratings of liking for each mode of feedback did not reach significance: X2(2)=5.091, p>.078. Figure 3 illustrates this trend.

This was also the case at Time Point 2, where there was a tendency for the Tutor Video feedback to be liked more than the Script Video or Written feedback (Written: M=6.0, SD=3.08, Script Video: M=6.50, SD=3.08, Tutor Video: M=6.93, SD=2.37). However, as at time point 1, these differences were minimal and did not reach significance: X2(2)=0.338, p>.844.

In the video conditions students reported that the nature of the feedback made them feel valued:

“It makes the students feel valued that you’ve taken the time to give a personal response than feels very similar to a 1:1.”

This was particularly true for students who traditionally found it difficult to engage with written feedback:

“I think if you are a slow reader like I am, it helps to listen to things.”

The video feedback also appeared to increase participants’ engagement with the material. Many students pointed to the way it made it difficult for them to skim over the feedback and instead forced them to really think about what was being conveyed. Bakla (2017) and Mayhew (2016) both reported similar effects using video-based feedback.

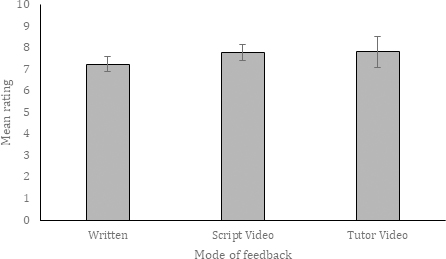

Students found feedback to be useful in all conditions.

In terms of usefulness, there was little difference in the ratings given in any condition (Kruskal-Wallis: X2(2)=3.34, p>.188). Students found the feedback to be similarly useful in all conditions.

Figure 4: Mean ratings of usefulness for the three different modes of feedback at Time-Point 1 (+/-1SEM)

This was also replicated at time-point 2: X2(2)=0.133, p>.94. There was a lot more variability in ratings at time-point 2, which could be due to the different nature of the assignment being marked at this point.
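For readers who want to run the same kind of comparison on their own survey data, the following is a minimal sketch of a Kruskal-Wallis test in plain Python. It is not the analysis script used in the Feedback Project: the function names and the example ratings are hypothetical, and the p-value shortcut assumes exactly three groups (two degrees of freedom), where the chi-square tail probability reduces to exp(-H/2).

```python
import math
from collections import Counter


def kruskal_wallis(*groups):
    """Return (H, df) for a Kruskal-Wallis test, corrected for tied ratings."""
    pooled = [x for g in groups for x in g]
    n = len(pooled)
    counts = Counter(pooled)

    # Tied values share the average of the ranks they occupy.
    rank_of = {}
    next_rank = 1
    for value in sorted(counts):
        c = counts[value]
        rank_of[value] = next_rank + (c - 1) / 2
        next_rank += c

    # H statistic computed from the rank sum of each group.
    rank_term = sum(sum(rank_of[x] for x in g) ** 2 / len(g) for g in groups)
    h = 12 / (n * (n + 1)) * rank_term - 3 * (n + 1)

    # Tie correction (Likert-style ratings usually contain many ties).
    correction = 1 - sum(c ** 3 - c for c in counts.values()) / (n ** 3 - n)
    if correction == 0:
        raise ValueError("all ratings are identical; H is undefined")
    return h / correction, len(groups) - 1


def p_value_df2(h):
    """Chi-square upper-tail probability, valid only for 2 degrees of freedom."""
    return math.exp(-h / 2)


# Hypothetical 1-5 ratings, invented for illustration only.
written = [3, 4, 2, 3, 4]
script_video = [4, 5, 4, 4, 5]
tutor_video = [5, 4, 5, 4, 5]

h, df = kruskal_wallis(written, script_video, tutor_video)
print(f"H({df}) = {h:.2f}, p = {p_value_df2(h):.3f}")

# The same shortcut applied to the time-point 1 usefulness statistic.
print(f"p for X2(2)=3.34: {p_value_df2(3.34):.3f}")
```

With more or fewer than three feedback conditions the degrees of freedom change, so a general chi-square routine from a statistics package would be needed in place of `p_value_df2`.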

The finding that usefulness did not differ according to the feedback type is important as it suggests that video feedback is valued not because it is any more useful but because it adds something to the experience of students that is lacking with written feedback.

The Unexpected Difficulties

Making feedback more personal carries with it a risk!

Video feedback, whether it included just the script being marked in real-time or whether the tutor was included in the video, was rated as significantly more personal. However, this was not always experienced positively. One participant in particular, in their qualitative response, suggested that the feedback was experienced as being more direct in a negative way.

“…hearing someone tell you it’s wrong feels worse than when you see it written down.”

Perhaps more so with video feedback than with traditional feedback, it is important to emphasise the strengths of a student’s work as much as the aspects that need to be improved.

Video feedback is reliant on technology

Where negative responses were given, these tended to focus on technological issues such as difficulty in hearing the commentary or not being able to print out the feedback. One of the issues with students printing out feedback is that this, in some cases, constitutes the whole of their engagement with it, and often little processing of the specific feedback message occurs. Some participants complained about having to re-watch the feedback a lot, although from a tutor’s perspective this could be seen as a positive!

Survey participation is always a challenge

At the first time-point, 47 of the 87 (54%) participants who completed the consent form also completed the survey. This dropped to 26 of the 87 (30%) participants by time-point 2. The Feedback Project was always intended as an optional opportunity for students to get involved, enabling them to gain experience first as a participant and then later as a researcher conducting their own research. As such, it is disappointing but perhaps not surprising that only 54% of those who consented completed the survey at time-point 1, and only 30% completed it at time-point 2. The timing of the second survey coincided with the preparation week for the summative assignment, so it is possible that this explains the low return. This is something that will need to be considered in future developments of the project.
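The participation figures can be checked directly from the counts reported in this chapter (192 in the cohort, 87 consenting, 47 and 26 survey completions). The short sketch below does this; the helper name `percent` is ours and purely illustrative.

```python
def percent(part, whole):
    """Return part/whole as a percentage rounded to one decimal place."""
    return round(100 * part / whole, 1)


cohort = 192       # Foundation Year students on the module
consented = 87     # students who completed the consent form
survey_tp1 = 47    # survey completions at time-point 1
survey_tp2 = 26    # survey completions at time-point 2

print("Consent rate (of cohort):", percent(consented, cohort))
print("Survey response, time-point 1 (of consenting):", percent(survey_tp1, consented))
print("Survey response, time-point 2 (of consenting):", percent(survey_tp2, consented))
```

Keeping the denominator explicit in this way makes it clear whether a percentage refers to the whole cohort or only to those who consented.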

This works for shorter feedback assignments only

Time-point 3 corresponded to the summative assignment feedback. Unfortunately this time-point had to be abandoned due to technical issues. To make videos of feedback for the longer summative assignments would either lead to the creation of very large files which students would not be able to download easily or would lead to an increase in time spent marking for tutors, who would have to mark the assignment and then prepare a script short enough to lend itself to video recording. Video feedback (of both types) was considerably less time-consuming than written feedback to prepare, but this was only the case when the assignment to be marked was relatively brief. When the assignment was lengthy and involved commentary on a number of different component parts, the video feedback became more time-consuming and would have required a substantial extension of marking time for feedback scripts to be prepared and edited to enable short and easily accessible videos to be delivered to students. As this was counter to the aims of the Feedback Project, it was decided to focus on the analysis of time-points 1 and 2 only. It was unfortunate that technical issues prevented the delivery of video feedback at time-point 3, and this in itself raises an important issue about the use of video technology for this purpose. As Carless et al. (2006) suggested, the time taken to provide feedback is a major concern that has implications for the detail in and frequency of feedback as well as the timely delivery of it.

Concluding Thoughts

The Feedback Project was an attempt to explore the effectiveness of feedback presented either in a written form, in a video showing the script with audio accompaniment or in a video showing both the script and a video of the tutor marking the script in real time. Video feedback was seen as significantly more personal than traditional written feedback but there did not appear to be any particular advantage gained from including a video of the tutor marking the script as part of that feedback. Simply having a video of the script being marked in real-time was sufficient for the feedback to be seen as more personal. This lends support to the findings of Harper et al (2018) who also found video feedback to be perceived as more personal. If this is indeed the essential underpinning of effective use of feedback as Harper and colleagues suggest, then it offers a useful insight into how to promote engagement with feedback in future assignments.

Acknowledgements

With many thanks to the Technology Enhanced Learning team at University of Sussex for their help in using Zoom to record the videos.

References

Ali, A.D., 2016. Effectiveness of Using Screencast Feedback on EFL Students’ Writing and Perception. English Language Teaching, 9(8), pp.106-121.

Bakla, A., 2017. Interactive Videos in Foreign Language Instruction: A New Gadget in Your Toolbox. Mersin University Journal of the Faculty of Education, 13(1).

Carless, D., Joughin, G. and Liu, N.F., 2006. How assessment supports learning: Learning-oriented assessment in action (Vol. 1). Hong Kong University Press.

Cavanaugh, A.J. and Song, L., 2014. Audio feedback versus written feedback: Instructors’ and students’ perspectives. Journal of Online Learning and Teaching, 10(1), p.122.

Chiang, I.A., 2009. Which Audio Feedback is Best? Optimising Audio Feedback to Maximise Student and Staff Experience. In A Word in Your Ear conference, Sheffield, UK. http://research.shu.ac.uk/lti/awordinyourear2009/docs/Chiang-full-paper.pdf

Duncan, N., 2007. ‘Feed‐forward’: improving students’ use of tutors’ comments. Assessment & Evaluation in Higher Education, 32(3), pp.271-283.

Gibbs, G. and Simpson, C., 2005. Conditions under which assessment supports students’ learning. Learning and teaching in higher education, (1), pp.3-31.

Gould, J. and Day, P., 2013. Hearing you loud and clear: student perspectives of audio feedback in higher education. Assessment & Evaluation in Higher Education, 38(5), pp.554-566.

Harper, F., Green, H. and Fernandez-Toro, M., 2018. Using screencasts in the teaching of modern languages: investigating the use of Jing® in feedback on written assignments. The Language Learning Journal, 46(3), pp.277-292.

Hattie, J., 2009. The black box of tertiary assessment: An impending revolution. Tertiary assessment & higher education student outcomes: Policy, practice & research, pp.259-275.

Hynson, Y.T.A., 2012. An innovative alternative to providing writing feedback on students’ essays. Teaching English with Technology, 12(1), pp.53-57.

Ice, P., Curtis, R., Phillips, P. and Wells, J., 2007. Using asynchronous audio feedback to enhance teaching presence and students’ sense of community. Journal of Asynchronous Learning Networks, 11(2), pp.3-25.

Lunt, T. and Curran, J., 2010. ‘Are you listening please?’ The advantages of electronic audio feedback compared to written feedback. Assessment & Evaluation in Higher Education, 35(7), pp.759-769.

Mathisen, P., 2012. Video feedback in higher education–A contribution to improving the quality of written feedback. Nordic Journal of Digital Literacy, 7(02), pp.97-113.

Mayer, R.E., 2002. Multimedia learning. In Psychology of learning and motivation (Vol. 41, pp. 85-139). Academic Press.

Mayer, R.E. and Moreno, R., 1998. A split-attention effect in multimedia learning: Evidence for dual processing systems in working memory. Journal of educational psychology, 90(2), p.312.

Mayhew, E., 2017. Playback feedback: the impact of screen-captured video feedback on student satisfaction, learning and attainment. European Political Science, 16, pp.179-192.

McCarthy, J., 2015. Evaluating written, audio and video feedback in higher education summative assessment tasks. Issues in Educational Research, 25(2), p.153.

Merry, S. and Orsmond, P., 2008. Students’ attitudes to and usage of academic feedback provided via audio files. Bioscience Education, 11(1), pp.1-11.

Moore, N.S. and Filling, M.L., 2012. iFeedback: Using video technology for improving student writing. Journal of College Literacy & Learning, 38, pp.3-14.

Nicol, D., 2010. From monologue to dialogue: improving written feedback processes in mass higher education. Assessment & Evaluation in Higher Education, 35(5), pp.501-517.

Price, M., Handley, K., Millar, J. and O’Donovan, B., 2010. Feedback: all that effort, but what is the effect? Assessment & Evaluation in Higher Education, 35(3), pp.277-289.

Rodway-Dyer, S., Knight, J. and Dunne, E., 2011. A case study on audio feedback with geography undergraduates. Journal of Geography in Higher Education, 35(2), pp.217-231.

Thomas, R.A., West, R.E. and Borup, J., 2017. An analysis of instructor social presence in online text and asynchronous video feedback comments. The Internet and Higher Education, 33, pp.61-73.

Thompson, R. and Lee, M.J., 2012. Talking with students through screencasting: Experimentations with video feedback to improve student learning. The Journal of Interactive Technology and Pedagogy, 1(1), pp.1-16.

Appendix 1

A copy of the survey given to participants at Time-Point 1.